HPC jobs

This section outlines useful best practices to consider when coding and running applications and pipelines on an HPC.

Job scheduler

To execute jobs in most HPC environments, you must first submit them to a queueing system. This system ensures that compute resources are allocated fairly and efficiently among users while scheduling the execution of jobs. Common queueing systems include SLURM (s-type commands such as sbatch), TORQUE (q-type commands such as qsub), and Moab (m-type commands such as msub).

| Program | Link |
|---|---|
| TORQUE qsub documentation | Link |
| Moab msub documentation | Link |
| SLURM sbatch/srun documentation | sbatch and srun |

We will focus on SLURM (Simple Linux Utility for Resource Management), a widely used open-source job scheduler designed to manage and allocate resources in high-performance computing (HPC) clusters. It efficiently schedules and runs batch jobs, handles job queues and optimizes resource utilization across multiple users and tasks.

Submitting Jobs using ‘sbatch’

The ‘sbatch’ command submits a batch script to the scheduler. It is ideal for running programs non-interactively, typically for tasks that take longer than a brief interactive session. A batch script includes:

- The requested resources

- A sequence of commands to execute

Useful SLURM commands:

# Submit the job

sbatch mybash.sh

# Show the status of your job, including its current state, how long it has been running, and the nodes allocated to it.

squeue -j job_id

# Cancel a job

scancel job_id1 job_id2

Here is an example of a bash script to submit to the queueing system:

mybash.sh

#!/bin/bash

#SBATCH --job-name=myjob

#SBATCH --output=myjob.out

#SBATCH --error=myjob.err

#SBATCH -D /home/USERNAME/ # working directory

#SBATCH --cpus-per-task 1 # number of CPUs. Commonly default: 1

#SBATCH --time 00:10:00 # time for the job HH:MM:SS.

#SBATCH --mem=1G # RAM memory

##SBATCH --array=1-10%4 # disabled by the leading "##"; with a single "#", this directive submits a job array of 10 tasks, of which at most 4 run concurrently. Alternative: #SBATCH --ntasks=1

# Activate environment

eval "$(conda shell.bash hook)"

conda activate bam_tools

# My commands: software, pipeline, etc.

snakemake -j1

exit 0

The first line of the script (#!/bin/bash) tells the system that this is a bash script. The lines starting with #SBATCH are directives for ‘sbatch’ that specify various options for the job.

Finally, submit your job to the queueing system:

Terminal

sbatch mybash.sh

Submitted batch job 39712574

Check details about a specific job (e.g. 39712574) using the following command:

jobinfo 39712574

Note that jobinfo is a site-specific helper and may not be available on every cluster; scontrol show job 39712574 is a standard SLURM alternative.

You can also include email notifications in your bash script by adding the following options:

#SBATCH --mail-type=begin # send email when job begins

#SBATCH --mail-type=end # send email when job ends

#SBATCH --mail-type=fail # send email if job fails

#SBATCH --mail-user=<your_email_address>

To monitor the job’s output in real time, refresh the last few lines of its log file using:

watch tail align.sh-39712574.out

To view the entire log file (not in real time), you can check it anytime with:

less -S align.sh-39712574.out

Reviewing the log files is helpful for debugging, especially when a command encounters an error and causes the job to terminate prematurely.

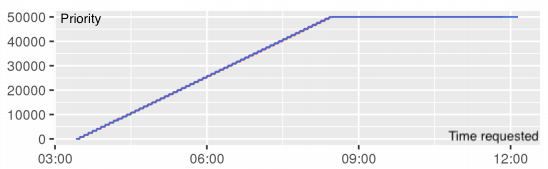

The figure above shows how the priority assigned to a SLURM job decreases as the requested time increases, with memory and CPU resources held constant. Higher values indicate lower priority.

| Description | Links |
|---|---|
| Slurm official guide | Quick start |
| Slurm cheat sheet | Cheat Sheet |
| Slurm university usage examples | GenomeDK and Princeton guides |
| Gwf, a simple Python tool to create interdependent job submissions | Gwf, developed at the University of Aarhus, makes it easy to create Slurm jobs and organize them as a pipeline with dependencies using Python (3.5+ required). You write the shell scripts and declare the dependencies without dealing with Slurm's more complicated syntax. The page also contains a useful guide. |

Job parallelization

Job parallelization is crucial for achieving high performance and running jobs effectively on an HPC. Here are two key scenarios where it is particularly important:

- Independent computational tasks: When tasks are independent of each other, job parallelization can enhance efficiency by allowing them to run concurrently.

- Multi-threaded tools: Some tools are specifically designed to perform parallel computations through multi-threading, enabling them to utilize multiple CPU cores for increased performance.
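Both scenarios can be sketched in a single job script. This is a minimal illustration, not a recommended pipeline: process_sample is a hypothetical stand-in for a real per-sample task, the sample names are invented, and the samtools command is only printed so the sketch runs on machines where the tool is not installed.

```shell
#!/bin/bash
# Sketch: two ways to use several CPU cores inside one job.
# SLURM sets SLURM_CPUS_PER_TASK only when --cpus-per-task is requested;
# fall back to 1 so the script also runs outside SLURM.
threads="${SLURM_CPUS_PER_TASK:-1}"

workdir="$(mktemp -d)"   # scratch directory for the demo

# Hypothetical stand-in for an independent per-sample task.
process_sample() {
    local sample="$1"
    echo "processed ${sample}" > "${workdir}/${sample}.done"
}

# Scenario 1: independent tasks run concurrently as background processes.
# In a real job, keep the number of concurrent tasks at or below the
# number of CPUs requested.
for sample in sampleA sampleB sampleC; do
    process_sample "${sample}" &
done
wait   # block until every background task has finished

# Scenario 2: a multi-threaded tool receives the allocated core count.
# Printed rather than executed, because the tool may not be installed here.
echo "samtools sort -@ ${threads} -o sorted.bam input.bam"

# Count the marker files written by the background tasks.
done_files=("${workdir}"/*.done)
n_done="${#done_files[@]}"
echo "finished tasks: ${n_done}"
```

Reading the core count from SLURM_CPUS_PER_TASK keeps the thread setting in step with the #SBATCH request, so the two values cannot drift apart.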

Job arrays (-a)

Interactive jobs

Use this option when you need to execute tasks that don’t require automation or repetitive scheduling. You can work interactively, monitor progress, and adjust commands as needed in real-time. Examples:

- Splitting a BAM file by chromosome for further analysis.

- Running exploratory or simple statistical analysis in Python or R.

- Compressing or decompressing multiple files in parallel required as input for a pipeline.

Keep in mind that once you exit the session, any processes running within it will stop automatically. This makes it ideal for tasks that require manual intervention or those that don’t need continuous execution after logging out.

How do interactive jobs work?

The queuing system schedules your job based on the resources you request (such as CPU, memory, and time requirements) and the current workload of the nodes. Once a node is assigned, the requested resources will be available to you, and the node’s name will appear in your terminal prompt.

Slurm provides the ‘srun --pty bash’ command to start an interactive session on a compute node. Specify the resource requirements by including one or more of the following options:

- --cpus-per-task: Number of CPUs per task

- --mem: Memory per node (e.g., --mem=4G)

- --time: Time limit for the job (e.g., --time=2:00:00 for 2 hours)

Terminal

# Slurm example

srun --mem=<GB_of_RAM>g -c <n_cores> --time=<days-h:min:sec> --pty /bin/bash

For example, to request 2 CPUs, 4 GB of memory, and 1 hour wall time, use the following command:

slurm example

srun --cpus-per-task=2 --mem=4G --time=1:00:00 --pty bash

Efficient resource usage

Managing a large number of short jobs

Each time a job is submitted to the job manager (e.g., SLURM) in a computing cluster, there’s a time overhead required for resource allocation, output preparation, and queue organization. To minimize this overhead, it’s often more efficient to group tasks into longer jobs when possible.

However, it’s important to strike a balance. If jobs are too long and encounter an issue, significant time and resources can be lost. This risk can be mitigated by tracking outputs at each stage, ensuring you only rerun the necessary portions of the job. For example, by checking whether a specific output already exists, you can prevent redundant computations and reduce wasted effort.
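The output check described above could look roughly like the following. It is a sketch under assumptions: run_step is a hypothetical helper (not part of SLURM), and the echo commands stand in for real pipeline steps that write their result to standard output.

```shell
#!/bin/bash
# Sketch: rerun only the steps whose output is missing.
set -euo pipefail

outdir="$(mktemp -d)"   # scratch directory for the demo

# run_step OUTPUT COMMAND...  (hypothetical helper, not a SLURM feature)
# Runs COMMAND only when OUTPUT does not exist yet or is empty. The
# command's standard output is captured in a temporary file and renamed
# on success, so a failed step never leaves a partial file under the
# final name.
run_step() {
    local output="$1"
    shift
    if [[ -s "${output}" ]]; then
        echo "skip: ${output} already exists"
        return 0
    fi
    echo "run:  ${output}"
    "$@" > "${output}.tmp"
    mv "${output}.tmp" "${output}"
}

run_step "${outdir}/step1.txt" echo "result of step 1"
run_step "${outdir}/step2.txt" echo "result of step 2"

# Second pass, as after a resubmission: both steps are skipped.
run_step "${outdir}/step1.txt" echo "result of step 1"
run_step "${outdir}/step2.txt" echo "result of step 2"
```

Workflow managers such as Snakemake apply the same idea automatically by comparing declared outputs against the files on disk.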

A particularly powerful feature in queue systems like SLURM is job arrays (also called batch arrays). These allow you to automate running large numbers of similar jobs. A job array consists of multiple jobs with identical code and parameters but with different input files. Each job in the array is assigned a unique index, which SLURM exposes to the job script through the SLURM_ARRAY_TASK_ID environment variable, so the script can select its own input. This greatly simplifies managing and executing large-scale tasks.
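A minimal sketch of an array job script follows. The job name, the three sample file names, and the input list are assumptions made for illustration; in practice the list would be an existing file in your project. SLURM sets SLURM_ARRAY_TASK_ID for each array task, and the fallback value lets the script be tested outside the scheduler.

```shell
#!/bin/bash
#SBATCH --job-name=array_demo
# Three array tasks with indices 1, 2 and 3.
#SBATCH --array=1-3
# In the file name, %A is the array job ID and %a is the task index.
#SBATCH --output=array_demo_%A_%a.out

# SLURM exports SLURM_ARRAY_TASK_ID to each array task; default to 1 so
# the script can also be tested outside the scheduler.
task_id="${SLURM_ARRAY_TASK_ID:-1}"

# Hypothetical input list with one file per line. It is created here only
# to keep the sketch self-contained.
input_list="$(mktemp)"
printf '%s\n' sampleA.bam sampleB.bam sampleC.bam > "${input_list}"

# Pick the line whose number matches the task index.
input_file="$(sed -n "${task_id}p" "${input_list}")"

echo "task ${task_id} processes ${input_file}"
```

Mapping the index to a line of a list file keeps the script unchanged when samples are added; only the list and the --array range need updating.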

| Description | Links |
|---|---|
| SLURM tutorial on job arrays | Job Array |
| SLURM cheat sheet | Cheat Sheet |
| SLURM guide | Quick start |

Workflow managers can also assist in automating and tracking jobs, ensuring that resources are efficiently allocated while reducing overhead and preventing errors in complex workflows.

Managing large STDOUT outputs

Minimize the amount of information printed to the standard output (STDOUT) to avoid overwhelming the terminal screen. Excessive outputs can become problematic, especially when numerous parallel jobs are running, potentially cluttering the home directory and leading to errors or data loss. Instead, consider directing outputs to software-specific data formats (like .RData files for R) or, at the very least, to plain text files. This approach helps maintain a clean workspace and reduces the risk of encountering issues related to excessive STDOUT content.
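One way to follow this advice inside a job script is to redirect each stream to its own file and print only a short summary. The sketch below assumes a hypothetical noisy_step function standing in for a real tool, and uses a scratch directory where a project would normally use its own logs folder.

```shell
#!/bin/bash
# Sketch: keep a job's printed output small by redirecting each stream.
logdir="$(mktemp -d)"   # scratch directory; a project logs/ folder in practice

# Hypothetical noisy step that writes to both STDOUT and STDERR.
noisy_step() {
    for i in 1 2 3; do
        echo "progress line ${i}"
    done
    echo "warning: something to look at" >&2
}

# STDOUT and STDERR go to separate files instead of the job's main output.
noisy_step > "${logdir}/step.out" 2> "${logdir}/step.err"

# Only a one-line summary is printed.
n_lines="$(wc -l < "${logdir}/step.out")"
echo "step finished, ${n_lines} lines logged"
```

Keeping STDERR in a separate file makes warnings and errors easy to find without scrolling through routine progress messages.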

Queueing system best practices

- Avoid running jobs or scripts on the login nodes.

- Submit batch jobs using the sbatch command and ensure that your submission scripts primarily consist of queueing system parameters and job executions.

- Introduce a delay between job submissions when submitting multiple jobs to prevent overwhelming the system.

- Utilize job arrays for submitting multiple identical jobs efficiently.

- Use interactive sessions for testing and interactive jobs.

- Incorporate software modules in your pipelines for improved environment control.

- Estimate resource requirements before submitting jobs, including CPU, memory, and time, to optimize resource usage. Always test your code with a small, representative sample (toy example) to ensure the pipeline functions correctly before running larger jobs.
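The advice on spacing out submissions can be sketched as a simple loop. The script names and the one-second pause are assumptions chosen for illustration; check your cluster's guidance for a suitable delay. By default the loop is a dry run that only prints each command, and setting SUBMIT=sbatch on a cluster would submit for real.

```shell
#!/bin/bash
# Sketch: submit several scripts with a pause between submissions.
# SUBMIT defaults to a dry run that only prints the command.
submit="${SUBMIT:-echo sbatch}"
delay_seconds=1   # assumed pause; adjust to your cluster's guidance
submitted=0

# Hypothetical job scripts.
for script in align_sampleA.sh align_sampleB.sh align_sampleC.sh; do
    # ${submit} is left unquoted on purpose so "echo sbatch" splits
    # into a command and its first argument.
    ${submit} "${script}"
    submitted=$((submitted + 1))
    sleep "${delay_seconds}"
done

echo "submitted ${submitted} jobs"
```

When the jobs differ only in their input, a job array is usually the better choice, since it needs a single submission.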