ln -sf ../Data

mkdir -p Results/GWAS5Association testing

Biometric model

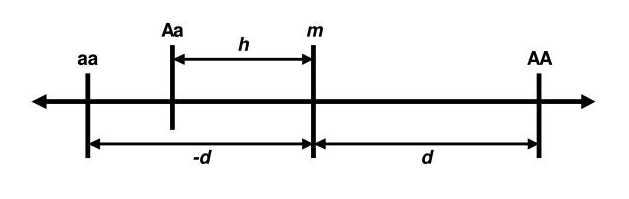

The theory behind genetic association is rooted in the biometrical model, first established by Fisher. According to this model, each genetic variant has an additive effect on a trait’s value. For a specific variant and its genotypes, each genotype contributes differently to the trait. For example, in a two-allele system (A and a), the effects of the three genotypes (AA, Aa, a) are defined by two parameters: d (twice the difference between the homozygotes, AA and aa) and h (the effect of the heterozygote, Aa). The mean effect of the homozygotes is m. These parameters, d and h, are called genotypic effects (Neale and Cardon 2013).

Example

Imagine that we are talking about genes that influence adult stature. Let us assume that the normal range for males is from 1.47m to 2m: that is, about 0.56m. Let’s assume that each somatic chromosome has one gene of roughly equivalent effect. Then, in each locus, the homozygotes contribute ±d=±0.28 cm (from the midpoint, m), depending on whether they are AA, the increasing homozygote, or aa, the decreasing homozygote. While some loci may have larger effects, others are likely to have smaller contributions. This model suggests that the effect of any given gene is subtle and difficult to detect using classical genetic methods.

In GWAS, linear models have been typically used for continuous phenotypes (e.g. BMI, height, blood pressure) and logistic models for binary traits (e.g. disease presence). These models account for fixed effects (such as genotype) but also need to consider random effects, represented as an error term, \(e\), to minimize the influence of covariates, like sex of population structure.

Linear mixed models (LMMs) are increasingly popular as an alternative to standard linear models and have proven to be effective for analyzing complex traits. They adjust for confounding factors such as population stratification, family structure, and cryptic relatedness, resulting in more reliable test statistics. However, they are usually more computationally demanding.

Modelling

The linear regression model

The basic linear regression model is written as:

\[y = G\beta_G + X\beta_X + \epsilon\]

Here, \(y\) is the phenotype vector, \(G\) is the genotype/dosage matrix (the allele counts) for the current variant, \(X\) is the fixed-covariate matrix, and \(e\) is the error term subject to least-squares minimization.

PLINK supports SNP-trait association testing for both quantitative and binary traits:

-assoc: It performs chi-square tests for binary traits. For quantitative traits, it uses asymptotic methods (likelihood ratio test or Wald test) or empirical significance values. Covariates are not supported. It will automatically treat outcomes as quantitative when the phenotype column contains values other than 1, 2, 0, or missing.--linear: Fits linear regression models for quantitative traits, allowing covariates.--logistic: Fits or Firth logistic regression models for binary traits, supporting covariates and SNP-covariate interactions.

Both --linear and --logistic are more flexible than --assoc but may run slower. Further details are available in PLINK’s documentation. For detailed information on PLINK’s association methods, visit PLINK Association Analysis PLINK 1.9 or PLINK 2.0. Note that command options differ between versions.

PLINK in association testing

PLINK performs one degree of freedom (1 df) chi-square allelic test in which the trait value, or the log‐odds of a binary trait, increases or decreases linearly as a function of the number of risk alleles. This basic biallelic test will compare the frequency of the alleles in cases vs. controls. All models are tests for the minor allele a: * allelic association test, 1 df: a vs. A

In addition, non‐additive tests are available: * dominant gene action test, 1 df: (aa & Aa) vs AA * recessive gene action test, 1 df: aa vs (Aa & AA) * genotypic association test, 2 df: aa vs Aa vs AA

However, non‐additive tests are not widely applied, because the statistical power to detect non‐additivity is low in practice. More complex analyses (e.g., Cox regression analysis) can be performed by using R‐based “plug‐in” functions in PLINK.

Analysis with PLINK

![]() Switch to the Bash kernel.

Switch to the Bash kernel.

We link the data folder again and create a folder for output files.

Recall that our dataset contains binary traits: phenotype can take two values, 0 or 1. In this tutorial, we will apply both --assoc and --logistic to our data. A reminder that the -assoc option does not allow for population stratification correction using covariates such as principal components (PCs)/MDS components, making it less suited for association analyses. However, we will look at the results for educational purposes.

We start with --assoc:

plink --bfile Results/GWAS4/HapMap_3_r3_9 --assoc --out Results/GWAS5/assoc_resultsPLINK v1.90b6.21 64-bit (19 Oct 2020) www.cog-genomics.org/plink/1.9/

(C) 2005-2020 Shaun Purcell, Christopher Chang GNU General Public License v3

Logging to Results/GWAS5/assoc_results.log.

Options in effect:

--assoc

--bfile Results/GWAS4/HapMap_3_r3_9

--out Results/GWAS5/assoc_results

385567 MB RAM detected; reserving 192783 MB for main workspace.

1073226 variants loaded from .bim file.

109 people (55 males, 54 females) loaded from .fam.

109 phenotype values loaded from .fam.

Using 1 thread (no multithreaded calculations invoked).

Before main variant filters, 109 founders and 0 nonfounders present.

Calculating allele frequencies... done.

Total genotyping rate is 0.998028.

1073226 variants and 109 people pass filters and QC.

Among remaining phenotypes, 54 are cases and 55 are controls.

Writing C/C --assoc report to Results/GWAS5/assoc_results.assoc ...

done.Let’s have a look at the association output file:

head -5 Results/GWAS5/assoc_results.assoc CHR SNP BP A1 F_A F_U A2 CHISQ P OR

1 rs3131972 742584 A 0.1944 0.1455 G 0.9281 0.3354 1.418

1 rs3131969 744045 A 0.1759 0.1 G 2.647 0.1037 1.921

1 rs1048488 750775 C 0.1981 0.1455 T 1.054 0.3045 1.451

1 rs12562034 758311 A 0.06481 0.1182 G 1.863 0.1723 0.5171 where the columns represent:

CHRChromosome code

SNPVariant identifier

BPBase-pair coordinate

A1Allele 1 (usually minor)

F_AAllele 1 frequency among cases

F_UAllele 1 frequency among controls

A2Allele 2

CHISQAllelic test chi-square statisticPAllelic test p-value. The p-value indicates the probability that the observed association (or one more extreme) would occur by chance if there is no true association between the SNP and the trait.ORodds(allele 1 | case) / odds(allele 1 | control)

Next, we use the --logistic and --covar options to provide the MDS components covar_pca.txt as covariates (from the previous tutorial). We will remove rows with NA values from the output to prevent issues during plotting.

# --logistic

# Note, we use the option --hide-covar to only show the additive results of the SNPs in the output file.

plink --bfile Results/GWAS4/HapMap_3_r3_9 \

--covar Results/GWAS4/covar_pca.txt \

--logistic hide-covar \

--out Results/GWAS5/logistic_results

# Remove NA values, as they might give problems generating plots in later steps.

awk '!/'NA'/' Results/GWAS5/logistic_results.assoc.logistic > Results/GWAS5/logistic_results.assoc_2.logisticPLINK v1.90b6.21 64-bit (19 Oct 2020) www.cog-genomics.org/plink/1.9/

(C) 2005-2020 Shaun Purcell, Christopher Chang GNU General Public License v3

Logging to Results/GWAS5/logistic_results.log.

Options in effect:

--bfile Results/GWAS4/HapMap_3_r3_9

--covar Results/GWAS4/covar_pca.txt

--logistic hide-covar

--out Results/GWAS5/logistic_results

385567 MB RAM detected; reserving 192783 MB for main workspace.

1073226 variants loaded from .bim file.

109 people (55 males, 54 females) loaded from .fam.

109 phenotype values loaded from .fam.

Using 1 thread (no multithreaded calculations invoked).

--covar: 20 covariates loaded.

Before main variant filters, 109 founders and 0 nonfounders present.

Calculating allele frequencies... done.

Total genotyping rate is 0.998028.

1073226 variants and 109 people pass filters and QC.

Among remaining phenotypes, 54 are cases and 55 are controls.

Writing logistic model association results to

Results/GWAS5/logistic_results.assoc.logistic ... done.In addition to the columns (CHR, SNP, BP, A1, OR, and P) also present in the --assoc output, new columns include:

TEST: type of test performed. It usually includes “ADD” (additive model) but may also include other genetic models depending on the options specified (e.g., dominant, recessive).NMISS: number of non-missing observations (individuals) used in the analysis for that particular SNP.STAT: the test t-statistic for the logistic regression test

head -5 Results/GWAS5/logistic_results.assoc.logistic CHR SNP BP A1 TEST NMISS OR STAT P

1 rs3131972 742584 A ADD 109 NA NA NA

1 rs3131969 744045 A ADD 109 NA NA NA

1 rs1048488 750775 C ADD 108 NA NA NA

1 rs12562034 758311 A ADD 109 NA NA NAThe results of these GWAS analyses will be visualized in the final step, highlighting any genome-wide significant SNPs in the dataset.

For quantitative traits the option --logistic should be replaced by --linear. The logistic regression is used for binary trait (e.g. disease status: 1 = affected, 0 = unaffected) and gives the Odds Ratio at each SNP for the two trait values. Linear regression is for continuous phenotypes (e.g., height, blood pressure, gene expression levels) and makes a linear regression test.

Correction for multiple testing

Modern genotyping arrays can test up to 4 million markers, increasing the risk of false positives due to multiple testing. While a single comparison has a low error rate, analyzing millions of markers amplifies this risk. Common methods to address this include:

- Bonferroni Correction: Adjusts the p-value threshold by dividing \(0.05\) by the number of tests, controlling false positives. It controls the probability of at least one false positive but may be overly strict due to SNP correlation caused by linkage disequilibrium (hence, increased FN).

- False Discovery Rate (FDR): Minimizes the proportion of false positives among significant results (specified threshold) being less conservative than Bonferroni. However, it assumes SNP independence, which may not hold with LD.

- Permutation Testing: Randomizes outcome labels to generate an empirical null distribution, enabling robust p-value adjustments. This process is repeated extensively (often millions of times) to eliminate true associations. It is computationally heavier.

To learn more about statistical testing and false positives, look at this online book chapter.

Examining PLINK --assoc output

Before executing PLINK’s command for multiple testing, answer the questions below.

The Bonferroni-corrected p-value threshold is calculated using an initial threshold of 0.05.

- Determine the new BF threshold for this dataset.

- How many SNPs in your dataset appear to be significantly associated with the phenotype using this threshold, and how many would be considered significant without the multiple testing correction?

Hint:

- Apply the formula: bonferroni_threshold <- alpha / num_snps. You can get the number of SNPs from

Results/GWAS4/HapMap_3_r3_9.bim. Use R.

- Apply the formula: bonferroni_threshold <- alpha / num_snps. You can get the number of SNPs from

- Look at the P-values from the association test in column 9 (

Results/GWAS5/assoc_results.assoc), how many SNPs would pass the two different thresholds?

- Look at the P-values from the association test in column 9 (

# Write code here and change kernel accordingly - BF P-value: \(4.7e-08\).

- 55433 SNPs are significant with \(0.05\) cutoff and 0 would pass the threshold \(4.7e-08\).

wc -l Results/GWAS4/HapMap_3_r3_9.bim1073226 Results/GWAS4/HapMap_3_r3_9.bim![]() Switch to the R kernel.

Switch to the R kernel.

result <- 0.05 / 1073788

format(result, scientific = TRUE, digits = 2)![]() Switch to the Bash kernel.

Switch to the Bash kernel.

awk '$9 < 0.05' Results/GWAS5/assoc_results.assoc | wc -l 55411awk '$9 < 4.7e-08' Results/GWAS5/assoc_results.assoc | wc -l 0PLINK Commands

We know that no SNPs would pass the strict BF cutoff, is this also the case for other correction approaches?

Adjustment for multiple testing

PLINK’s --adjust option will generate a file containing the adjusted significance values for several multiple correction approaches, saved to Results/GWAS5/adjusted_assoc_results.assoc.adjusted:

# --adjust

plink --bfile Results/GWAS4/HapMap_3_r3_9 \

--assoc \

--adjust \

--out Results/GWAS5/adjusted_assoc_results \

--silentNow, let’s look at the output file from PLINK

head -n5 Results/GWAS5/adjusted_assoc_results.assoc.adjusted CHR SNP UNADJ GC BONF HOLM SIDAK_SS SIDAK_SD FDR_BH FDR_BY

3 rs1097157 1.66e-06 1.861e-06 1 1 0.8316 0.8316 0.2871 1

3 rs1840290 1.66e-06 1.861e-06 1 1 0.8316 0.8316 0.2871 1

8 rs279466 2.441e-06 2.727e-06 1 1 0.9272 0.9272 0.2871 1

8 rs279460 2.441e-06 2.727e-06 1 1 0.9272 0.9272 0.2871 1 which contains the fields:

CHRChromosome numberSNPSNP identifierUNADJUnadjusted p-valueGCGenomic-control corrected p-valuesBONFBonferroni single-step adjusted p-valuesHOLMHolm (1979) step-down adjusted p-valuesSIDAK_SSSidak single-step adjusted p-valuesSIDAK_SDSidak step-down adjusted p-valuesFDR_BHBenjamini & Hochberg (1995) step-up FDR controlFDR_BYBenjamini & Yekutieli (2001) step-up FDR control

The Bonferroni correction for all SNPs (BONF) gives a value of 1, indicating that the SNP is not significantly associated with the phenotype based on the threshold 0.05/n. As mentioned earlier, this suggests that the method might be too conservative, potentially leading to a high number of false negatives (Type II errors).

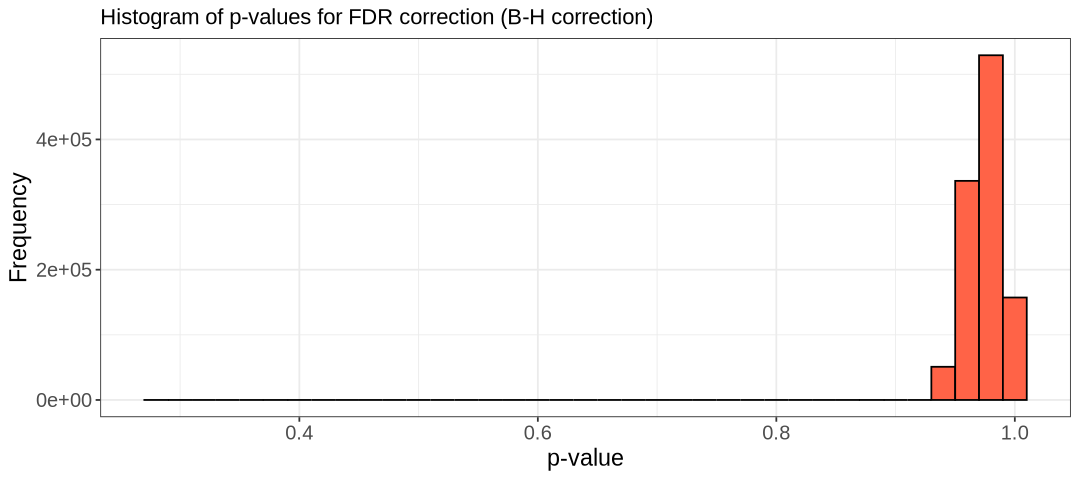

However, this is not the case for the Benjamini & Hochberg method of FDR (FDR-BH).

![]() Switch to the R kernel.

Switch to the R kernel.

In the code below, we will plot the distribution of FDR-BH values to illustrate this.

suppressMessages(suppressWarnings(library(ggplot2)))

options(repr.plot.width = 9, repr.plot.height = 4)

tests <- read.table("Results/GWAS5/adjusted_assoc_results.assoc.adjusted", header=T)

hist.relatedness <- ggplot(tests, aes(x=FDR_BH)) +

geom_histogram(binwidth = 0.02, col = "black", fill="tomato") +

labs(title = "Histogram of p-values for FDR correction (B-H correction)") +

xlab("p-value") +

ylab("Frequency") +

theme_bw() +

theme(axis.title=element_text(size=14), axis.text=element_text(size=12))

show(hist.relatedness)

# Write code here

In the R code above, use the summary() to get an overview of the distribution of FDR-BH values.

- What is the minimum FDR-adjusted p-value? and the mean FDR-adjusted p-value?

- Are there any SNPs significantly associated with the phenotype after applying genome-wide correction?

# Write your answer hereIn R:

summary(tests$FDR_BH) Min. 1st Qu. Median Mean 3rd Qu. Max.

0.2871 0.9646 0.9742 0.9709 0.9742 1.0000 The black line shows very few values with an FDR-adjusted p-value below ~0.95, with the lowest around 0.29 (as shown in the summary table). Therefore, no variants are significant at the 0.05 level after genome-wide correction.

Permutation

Let’s perform a permutation correction. This is a computationally intensive approach, so we will run it on a subset of the data to reduce the computational time (e.g. chr 22). We need the option --mperm to define how many permutations we want to do.

First, we generate a subset of the data:

awk '{ if ($1 == 22) print $2 }' Results/GWAS4/HapMap_3_r3_9.bim > Results/GWAS5/subset_snp_chr_22.txt

plink --bfile Results/GWAS4/HapMap_3_r3_9 \

--extract Results/GWAS5/subset_snp_chr_22.txt \

--make-bed \

--out Results/GWAS5/HapMap_subset_for_perm \

--silentThen, we perform 100K permutations (usually you would do more):

plink --bfile Results/GWAS5/HapMap_subset_for_perm \

--assoc \

--mperm 1000000 \

--threads 2 \

--out Results/GWAS5/perm_result \

--silentIn the output, the EMP1 indicates the empirical p-value, and EMP2 is the corrected one. Let’s check the ordered permutation results:

sort -gk 4 Results/GWAS5/perm_result.assoc.mperm | head -n5 CHR SNP EMP1 EMP2

22 rs4821137 0.00016 0.5401

22 rs11704699 5e-05 0.7745

22 rs910541 0.000235 0.8246

22 rs4821138 0.000255 0.9061 - Are there any significant SNPs?

Hint: generate an overview of the distribution of EMP2 values (similar to what we did for FDR_BH).

# Write your code hereAgain, we do not infer any significance from the permutation correction. Let’s use the summary() function and visualize the values.

![]() Switch to the R kernel.

Switch to the R kernel.

suppressMessages(suppressWarnings(library(ggplot2)))

options(repr.plot.width = 9, repr.plot.height = 4)

tests <- read.table("Results/GWAS5/perm_result.assoc.mperm", header=T)

hist.relatedness <- ggplot(tests, aes(x=EMP2)) +

geom_histogram(binwidth = 0.02, col = "black", fill="tomato") +

labs(title = "Histogram of p-values corrected by permutation") +

xlab("p-value") +

ylab("Frequency") +

theme_bw() +

theme(axis.title=element_text(size=14), axis.text=element_text(size=14), plot.title=element_text(size=15))

show(hist.relatedness)

summary(tests$EMP2) Min. 1st Qu. Median Mean 3rd Qu. Max.

0.5401 1.0000 1.0000 0.9999 1.0000 1.0000

In summary, when correcting for multiple testing, it is crucial to remember that different correction methods have distinct properties. It is the responsibility of the investigator to choose the appropriate method and interpret the results accordingly. Generally, permutation correction is considered the gold standard in GWAS analysis, though it may not always be effective with certain statistical models Uffelmann et al. (2021), a limitation to be mindful of. Further pros and cons of this method, which can be used for association and dealing with multiple testing, are described in this article (Marees et al. 2018).

This step would also need to be performed for the logistic output; however, we will skip it in this case as there are no differences in the results.

Manhattan and QQ-plots

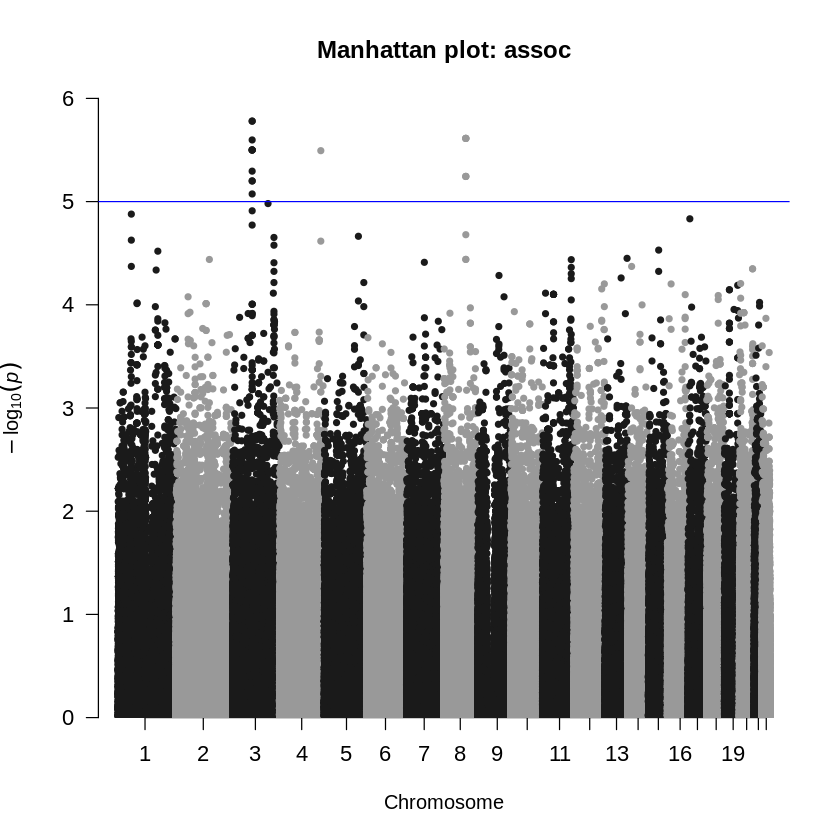

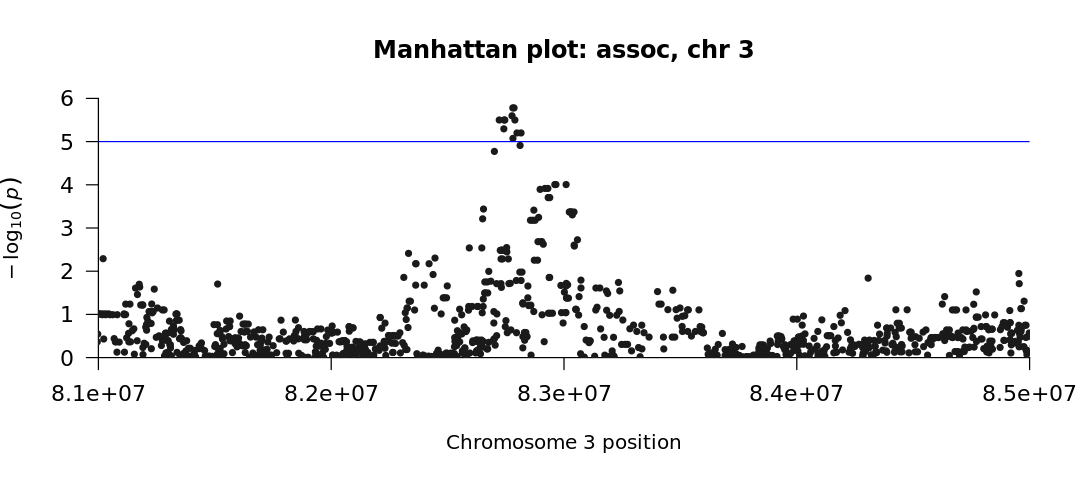

A common approach to identify high-association alleles is to plot the association results and look for peaks visually. One such method is the Manhattan plot, where each SNP is plotted against the negative log of its p-value from the association test (Gibson 2010). This can be done using the manhattan() function from the qqman package in R. To customize the plots (adjust colors, font sizes, etc.), check the package vignette here.

# Setup to avoid long messages and plot on screen

options(warn=-1)

options(jupyter.plot_mimetypes = 'image/png')

# Load GWAS package qqman

suppressMessages(library("qqman"))

# Manhattan plot using --logistic results

results_log <- read.table("Results/GWAS5/logistic_results.assoc_2.logistic", head=TRUE)

manhattan(results_log, main = "Manhattan plot: logistic", cex.axis=1.1)

# Manhattan plot using --assoc

results_as <- read.table("Results/GWAS5/assoc_results.assoc", head=TRUE)

manhattan(results_as, main = "Manhattan plot: assoc", cex.axis=1.1)

# Zoomed version on a significant region in chromosome 3

manhattan(subset(results_as, CHR==3), xlim = c(8.1e7, 8.5e7), chr="CHR",

main = "Manhattan plot: assoc, chr 3", cex.axis=1.1)

The blue line represents the threshold for significance (in the two plots, \(10^{-5}\)). We see no significant SNPs associated with the phenotype when we use the --logistic command (first plot). However, when we use the --assoc command (second plot), we obtain significant SNPs.

Why is there a difference?

Recall from the beginning of this chapter that the --assoc command does not correct for covariates. So even though we have promising (and hopefully publishable!) results, this form of analysis may be flawed by the underlying population stratification, which is taken into account with the --logistic model.

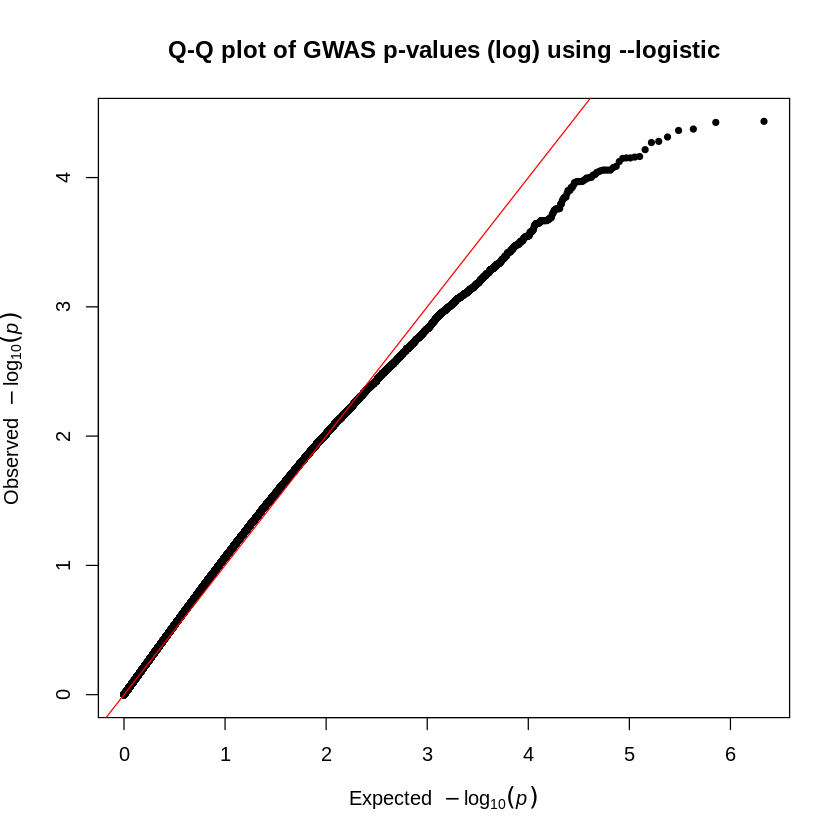

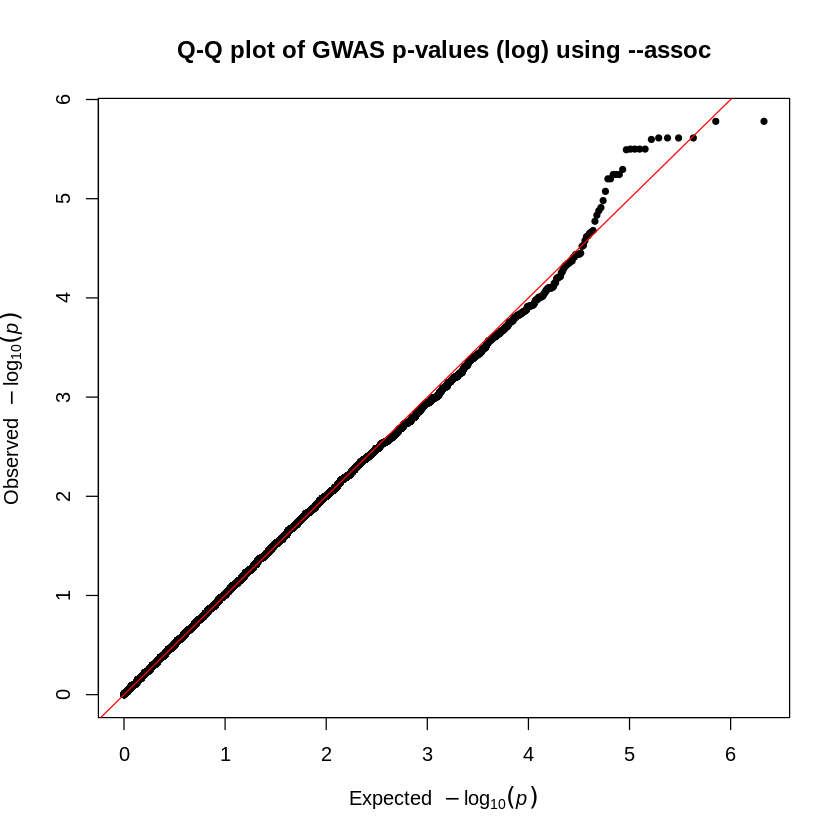

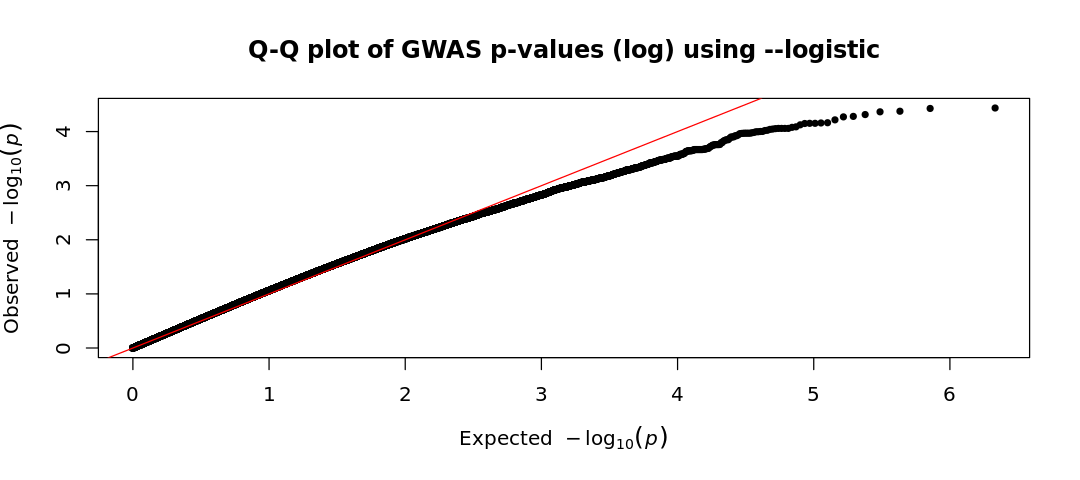

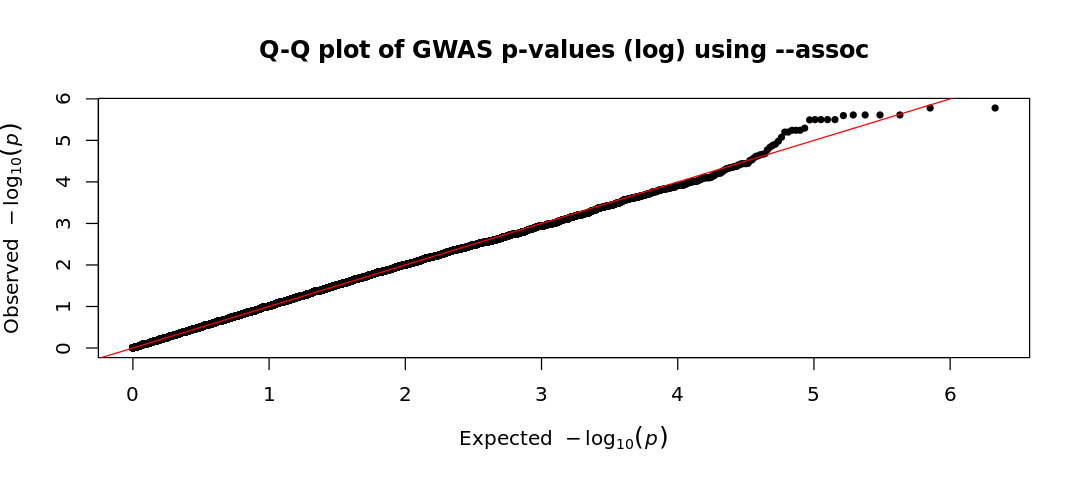

The second method of visually determining significance is to use a QQ-plot. This plots the expected \(-\log_{10}p\) value against the observed \(-\log_{10}p\) value. It’s a good way to observe not only outliers that could have significant associations but also peculiarities within our data. For example, if a plot suggests an extreme deviation between the x- and y-axes, then there might be an error with our analyses or data.

We will create these plots using the qq() function from the qqman package in R.

# Setup to avoid long messages and plot on-screen

options(warn=-1)

options(jupyter.plot_mimetypes = 'image/png')

# Install and load GWAS package qqman

suppressMessages(library("qqman"))

# QQ plot for --logistic

results_log <- read.table("Results/GWAS5/logistic_results.assoc_2.logistic", head=TRUE)

qq(results_log$P, main = "Q-Q plot of GWAS p-values (log) using --logistic")

# QQ plot for --assoc

results_as <- read.table("Results/GWAS5/assoc_results.assoc", head=TRUE)

qq(results_as$P, main = "Q-Q plot of GWAS p-values (log) using --assoc")

Given the two Q-Q plots (based on logistic regression and basic association test), how do the observed p-values compare to the expected p-values under the null hypothesis? Write a short interpretation based on your observations

Because of the relatively small sample size of the HapMap data, the genetic effect sizes in these simulations were set higher than what is typically observed in genetic studies of complex traits. In reality, detecting genetic risk factors for complex traits requires much larger sample sizes—typically in the thousands, and often in the tens or even hundreds of thousands. Finally, it is important to remember that this is a simulated dataset designed to mimic real data but remains very limited in size.

- In the first plot (

--logistic), the observed values are lower than the expected ones (consistent with what we saw in the Manhattan plot). This suggests an unexpected lack of significant findings. This could be due to overly conservative corrections, biases in the dataset, or poor study power (small sample size or very small effect sizes). - On the other hand, in the

assocQQ-plot, some SNPs show stronger associations than expected (observed p-values lower than expected), deviating from the diagonal which suggests a potential true association. Although the top-right corner typically continues deviating upward with observed values higher than expected, here it follows an unexpected pattern. However, before trusting any result, we must conduct further analyses, particularly to assess population stratification. Even if we have confidence in our association test and sufficient power to detect associated variants, careful evaluation is necessary to rule out confounding factors.

Moreover, it is important to remember that, while this suggests an association between these SNPs and the studied phenotype, there isn’t sufficient information here to determine the causal variant. In fact, there could potentially be multiple causal variants and the causal variants could be in LD with some of the significant variants. Identifying the causal variants would require further investigation using biological methods. However, this analysis has significantly reduced the number of SNPs that need to be studied.

Choose the Bash kernel

Choose the Bash kernel